AI may be dominating headlines, but one of the most important constraints on its future is still largely underappreciated: heat.

As GPUs and ASICs race past 1,500 W and push toward 2,000 W and beyond, cooling has become a defining factor in how much AI performance systems can actually deliver. Thermal limits now directly influence sustained token throughput, energy efficiency, water usage, deployment flexibility, and total cost of ownership.

Cooling no longer just supports AI. It increasingly shapes what’s possible, and it is critical to achieving the potential that AI can deliver.

This shift is showing up everywhere: in hyperscale data centers on Earth, and even in the early planning for space-based data centers. Thermal management is already mission critical for terrestrial facilities, and it is driving the discussion around space-based ones, where it becomes even more critical.

As Frore Systems Founder and CEO Seshu Madhavapeddy recently put it:

“Cooling has quietly become one of the biggest constraints on AI progress. As GPUs and ASICs push into entirely new power regimes, the industry can’t rely on incremental improvements to legacy coldplates. LiquidJet was built from the ground up to match the thermal reality of modern AI silicon, not just to cool it, but to unlock what comes next.”

That perspective reflects a broader industry realization: cooling is no longer just an engineering task. It is now a first-order infrastructure decision.

Cooling Is Now a Performance Constraint

In modern AI systems, thermal limits increasingly dictate real-world performance.

When silicon junction temperature cannot be kept within safe operating limits, systems throttle, efficiency drops, and the theoretical gains of next-generation GPUs and ASICs turn into diminishing returns. The challenge isn’t theoretical — it’s operational.
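The throttling behavior described above can be illustrated with a minimal steady-state sketch. It assumes the common lumped model in which junction temperature equals coolant temperature plus power times a single junction-to-coolant thermal resistance. The function names, the 90 °C limit, the 40 °C coolant temperature, and the resistance values are all illustrative assumptions, not measurements of any real accelerator or coldplate.

```python
def junction_temp_c(power_w: float, r_th_c_per_w: float, coolant_c: float) -> float:
    """Steady-state junction temperature: T_j = T_coolant + P * R_th."""
    return coolant_c + power_w * r_th_c_per_w


def max_sustained_power_w(t_limit_c: float, r_th_c_per_w: float, coolant_c: float) -> float:
    """Largest power the chip can hold indefinitely without exceeding t_limit_c."""
    return (t_limit_c - coolant_c) / r_th_c_per_w


# Illustrative assumptions only (hypothetical values).
T_LIMIT_C = 90.0       # assumed junction temperature limit
COOLANT_C = 40.0       # assumed coolant inlet temperature
CHIP_POWER_W = 1500.0  # power level of the class discussed in the article

# Compare three hypothetical junction-to-coolant thermal resistances (C/W).
for r_th in (0.04, 0.03, 0.02):
    t_j = junction_temp_c(CHIP_POWER_W, r_th, COOLANT_C)
    p_max = max_sustained_power_w(T_LIMIT_C, r_th, COOLANT_C)
    status = "throttles" if t_j > T_LIMIT_C else "sustains full power"
    print(f"R_th={r_th:.2f} C/W: T_j={t_j:.0f} C, "
          f"max sustained power {p_max:.0f} W -> {status}")
```

Under these assumptions, lowering thermal resistance raises the power a chip can sustain before it has to throttle, which is why cooling shows up directly in delivered performance.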

Cooling decisions now directly affect:

- Sustained performance and uptime

- Energy efficiency and operating costs

- Water usage and infrastructure demands

- Rack density and deployment flexibility

- Long-term total cost of ownership

In short, cooling is no longer about preventing failure. It is about enabling scale.

Why Legacy Cooling Architectures Are Hitting a Wall

Traditional coldplates were designed for a different era of compute. Power levels were lower, heat distribution was more uniform, and hotspot densities were manageable with relatively simple thermal approaches.

That world no longer exists.

Modern AI accelerators feature highly localized hotspots, complex power maps, and aggressive performance targets that legacy cooling architectures simply weren’t built to handle. As a result, data center operators are being forced into uncomfortable tradeoffs: throttling performance, overbuilding infrastructure, accepting inefficiencies that drive up cost and environmental impact, or contemplating an expensive infrastructure overhaul to support two-phase cooling.

The issue isn’t execution.

The issue is the architecture of current coldplates.

A Different Approach to Cooling — Built for Modern AI

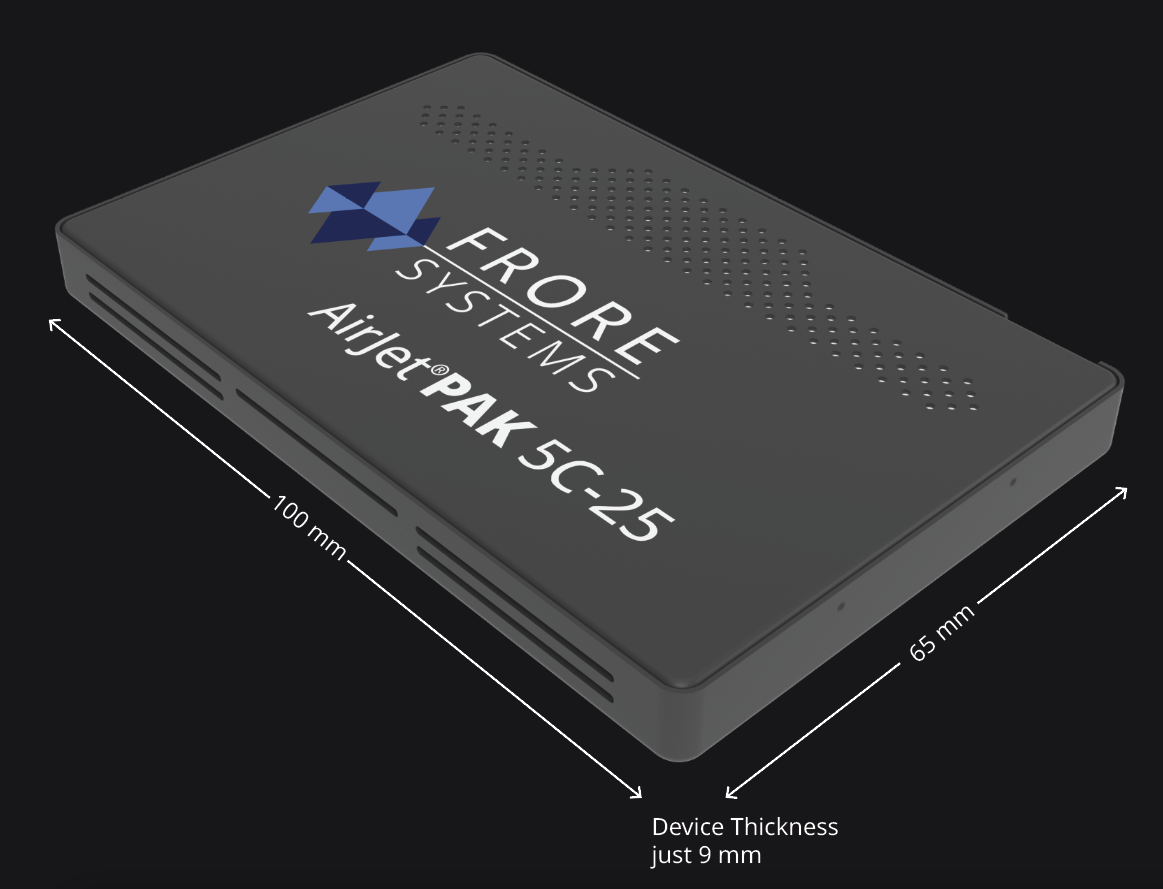

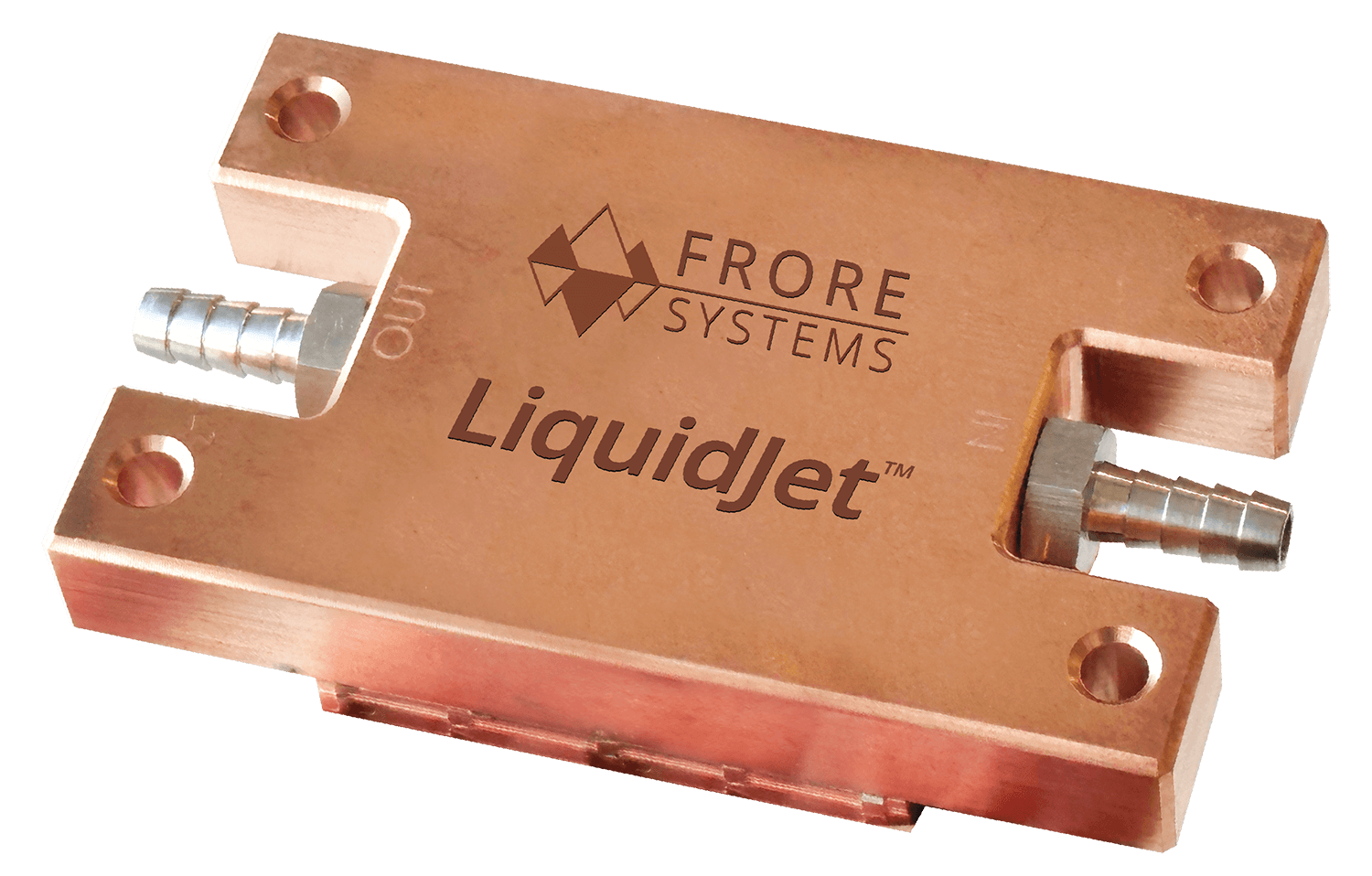

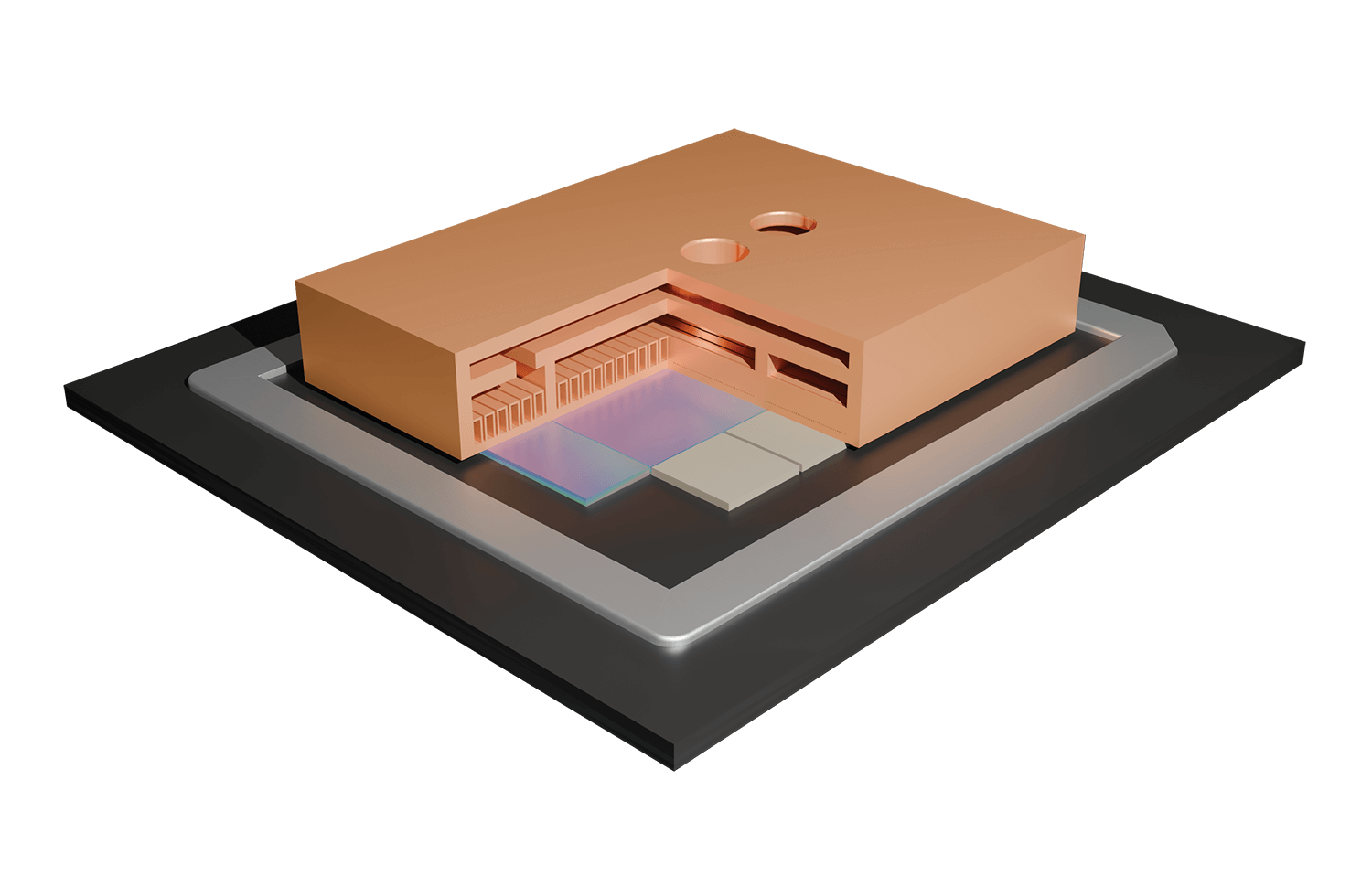

LiquidJet™ takes a fundamentally different approach to cooling—one designed for the realities of modern AI silicon and modern data center economics.

Rather than adapting legacy coldplate designs built for lower-power, more uniform heat profiles, Frore Systems applies semiconductor-style manufacturing techniques to its groundbreaking cooling solution. Using metal wafers, 3D short-loop jetchannel microstructures, multistage cooling, and hybrid 3D cell architectures, LiquidJet can be precisely tuned to match the actual heat maps of today’s GPUs, not just their average power levels.

This enables cooling performance to scale with where and how heat is generated, allowing higher sustained performance without forcing operators to overbuild or redesign their facilities.
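To see why matching the heat map matters more than matching average power, consider a toy sketch. It is not Frore's design method: it assumes a hypothetical 4x4 power map, a per-cell thermal resistance, and no lateral heat spreading between cells, and every number in it is invented for illustration.

```python
def temp_rise_map(power_map_w, r_map_c_per_w):
    """Per-cell temperature rise (C), ignoring lateral spreading between cells."""
    return [
        [p * r for p, r in zip(p_row, r_row)]
        for p_row, r_row in zip(power_map_w, r_map_c_per_w)
    ]


def peak(grid):
    """Largest value anywhere in a 2D grid."""
    return max(max(row) for row in grid)


def mean(grid):
    """Average value over all cells of a 2D grid."""
    cells = [v for row in grid for v in row]
    return sum(cells) / len(cells)


# Hypothetical power map in watts per cell: a hot 2x2 core in a cooler die.
POWER_MAP_W = [
    [20, 20, 20, 20],
    [20, 150, 150, 20],
    [20, 150, 150, 20],
    [20, 20, 20, 20],
]

# Uniform cooling: the same assumed resistance (C/W per cell) everywhere.
UNIFORM_R = [[0.30] * 4 for _ in range(4)]

# Targeted cooling: assumed lower resistance only over cells above 100 W.
TARGETED_R = [[0.15 if p > 100 else 0.30 for p in row] for row in POWER_MAP_W]

avg_based_rise = mean(POWER_MAP_W) * 0.30  # what sizing by average power predicts
uniform_peak = peak(temp_rise_map(POWER_MAP_W, UNIFORM_R))
targeted_peak = peak(temp_rise_map(POWER_MAP_W, TARGETED_R))

print(f"Rise predicted from average power: {avg_based_rise:.2f} C")
print(f"Actual hotspot rise, uniform cooling: {uniform_peak:.2f} C")
print(f"Actual hotspot rise, targeted cooling: {targeted_peak:.2f} C")
```

In this simplified model the hotspot, not the average, sets the peak temperature, so reducing resistance only where the power is concentrated lowers the limiting temperature without changing cooling elsewhere.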

Just as importantly, LiquidJet delivers this capability without requiring expensive infrastructure changes. It is designed as a drop-in, direct-to-chip upgrade that works within existing liquid cooling loops and data center environments.

Operators can unlock more performance from current systems—higher power envelopes, denser deployments, and improved efficiency—without major retrofits, new plumbing architectures, or long deployment cycles.

In an era where compute is advancing faster than facilities can be rebuilt, this approach matters. LiquidJet allows data centers to extend the value of existing infrastructure while keeping pace with the next generation of AI accelerators.

Cooling doesn’t have to slow AI down—or make it prohibitively expensive to scale. It just has to be built for what AI has become.

As Seshu explains:

“Traditional coldplates were never designed for the hotspot profiles we’re seeing in today’s AI accelerators. LiquidJet applies semiconductor-style manufacturing to cooling itself, allowing us to precisely target where heat is generated. That shift is what enables the performance, efficiency, and scalability AI infrastructure now demands.”

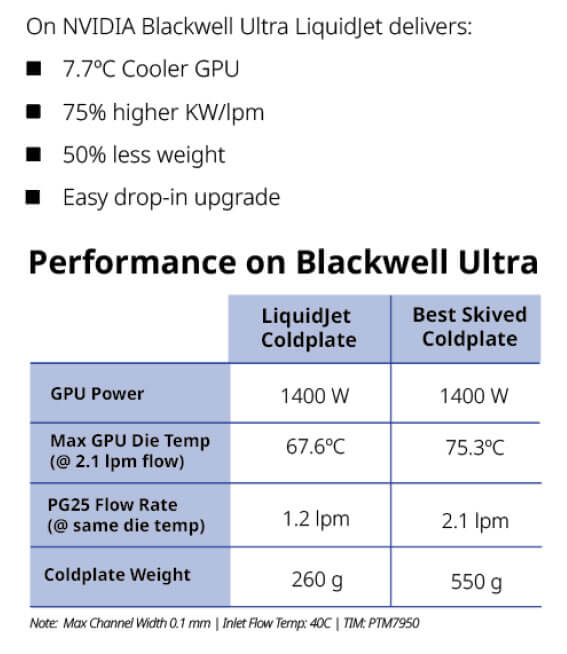

Recent LiquidJet demonstrations on next-generation GPUs — including Blackwell Ultra and Rubin-class architectures — reinforce a growing conclusion across the industry: cooling innovation must evolve alongside silicon innovation.

Blackwell Ultra Results

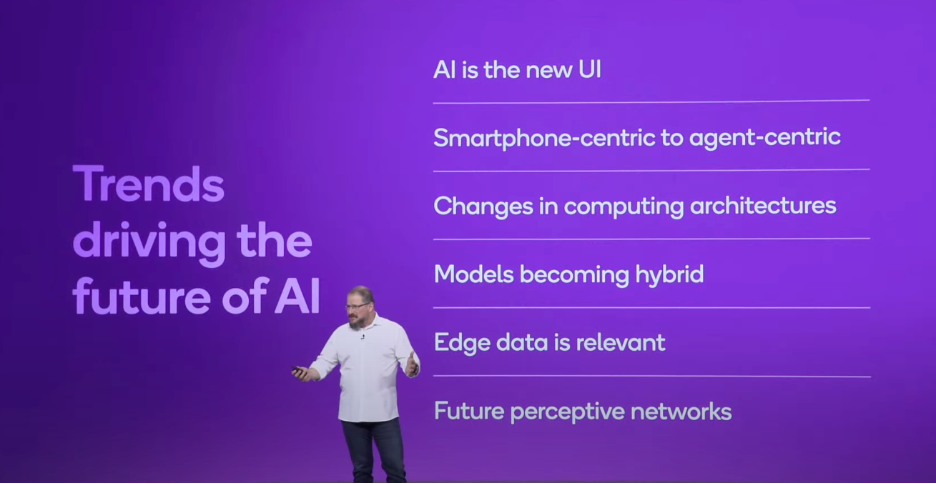

Cooling Is Becoming a System-Level AI Decision

The larger story here extends beyond any single cooling technology.

Cooling is no longer a component choice made later in the design cycle. It is a system-level decision that influences:

- How AI platforms are architected

- Where they can be deployed

- How efficiently they can scale over time

As AI infrastructure expands — on Earth and beyond — the ability to manage heat intelligently, efficiently, and sustainably will increasingly define competitive advantage.

In the AI era, cooling is critical.

It is a strategic decision — and one of the most important choices shaping the future of AI infrastructure.

Cooler GPUs and ASICs → Higher sustained performance → More AI capability